Sentence Similarity and Semantic Search using free Huggingface Embedding API

Sentence similarity involves determining the likeness between two texts. The idea behind semantic search is to embed all entries in your corpus, whether sentences, paragraphs, or documents, into a vector space. The query is embedded into the same vector space at search time, and the closest embeddings from your corpus are found.

Some applications of sentence similarity include question answering, passage retrieval, paraphrase matching, duplicate question retrieval, and semantic search.

In this article, we will explore semantic search. The application uses sentence similarity to implement a document search on a Medium blog article. The input to the application will be a question/sentence, and the output will be a set of sentences containing semantically similar content to the input sentence.

The Sentence Transformers library

The Sentence Transformers library is open-source for creating state-of-the-art embeddings from text and computing sentence similarity.

Hugging Face offers a free Serverless Inference API to provide on-demand predictions from over 100,000 models deployed on the Hugging Face Hub.

Inference Endpoints provide support for operations offered by the following libraries: -

- Transformers

- Sentence-Transformers, and

- Diffusers (for the Text To Image task).

We have the option to choose any model from the **Sentence Transformers library by Huggingface Model Hun.** The sentence transformer models are of two categories

We will use the Sentence Embeddings feature extraction provided by the Sentence-Transformers library. There are two categories of sentence transformer models: Bi-Encoder (Retrieval) and Cross-Encoder (Re-Ranker).

- The Bi-encoder independently embeds the sentences and search queries into a vector space. The result is then passed to the cross-encoder to check the relevance/similarity between the query and sentences.

- A Cross-Encoder, based on a Cross-Encoder, can substantially improve the user's final results. The query and a possible document are simultaneously passed to the transformer network, which then outputs a single score between 0 and 1 indicating how relevant the document is for the given query. The Cross-Encoder further boosts performance, especially when searching over a corpus for which the bi-encoder was not trained.

In this article, we will explore the bi-encoder-based model sentence-transformers/all-MiniLM-L6-v2.

- The model has 22.7 million parameters, and it can map sentences and paragraphs to a 384-dimensional dense vector space. It is designed for tasks such as clustering or semantic search.

- This model is meant to be used as a sentence and short paragraph encoder. When given an input text, it produces a vector that captures the semantic information. The sentence vector can be used for information retrieval, clustering, or sentence similarity assessment. By default, input text longer than 256 word pieces is truncated.

import requests

model_id = "sentence-transformers/all-MiniLM-L6-v2"

API_TOKEN = "xxxxxxxxxxxxxxxxxxx"

api_url = f"<https://api-inference.huggingface.co/pipeline/feature-extraction/{model_id}>"

headers = {"Authorization": f"Bearer {API_TOKEN}"}

def query(payload):

response = requests.post(API_URL, headers=headers, json=payload)

return response.json()

data = query("Can you please let us know more details about your ")

In this article, we will utilize the langchain framework. We will precompute the embeddings using the Hugging Face endpoint and then create a vector store using chromaDB. We will then retrieve the top-k chunks of the article that match the embedding of the input question.

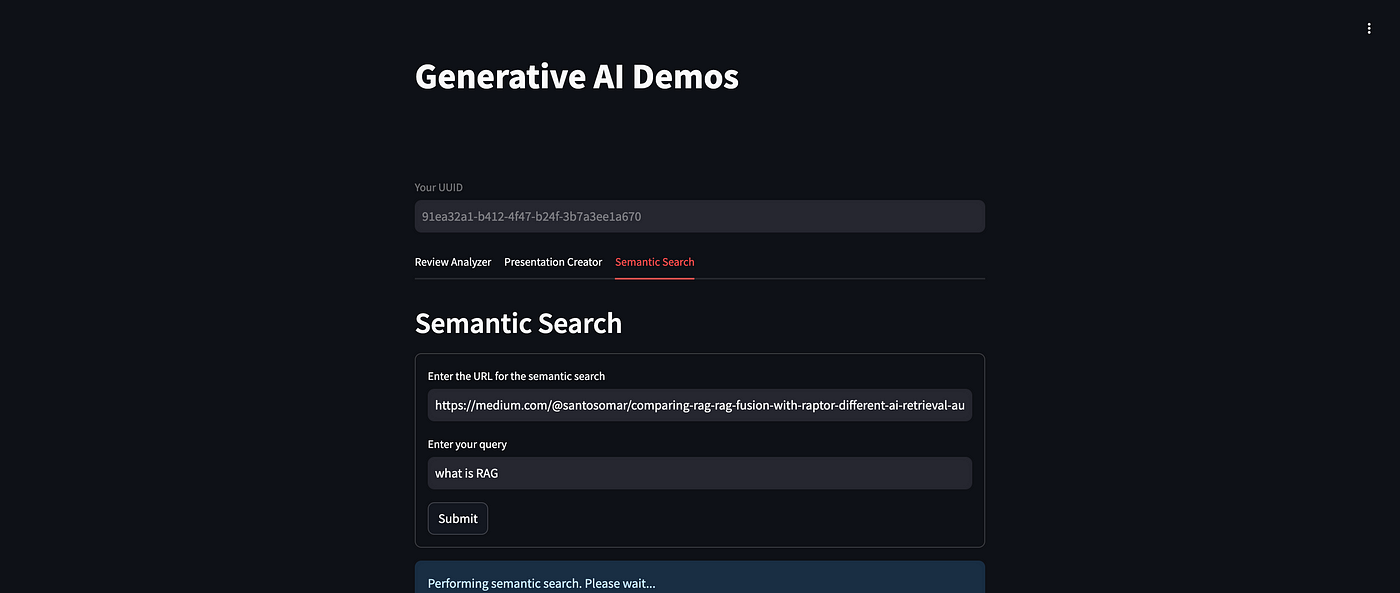

Below is a link to the demo application

https://huggingface.co/spaces/pi19404/reviewAnalyzerDemo

navigate to → Semantic Search

- Enter the URL

- Enter the question

- Click on Submit

The application

- will read the contents of a blog article in plain text.

- It will then split the document into chunks of length 256 words, with an overlapping text of 50 words.

- Next, it will encode the document and store the embeddings in a local cache for that document. When given an input query, it will return the top K sections of text from the blog article.

In future articles, we will explore the following features:

- Providing answers instead of top-K query search results (RAG)

- Ability to have continuous conversations with context

- Ability to have conversations with a knowledge base consisting of multiple blog articles

- Incorporating different types of RAG frameworks for more contextual conversations

Comments

Post a Comment